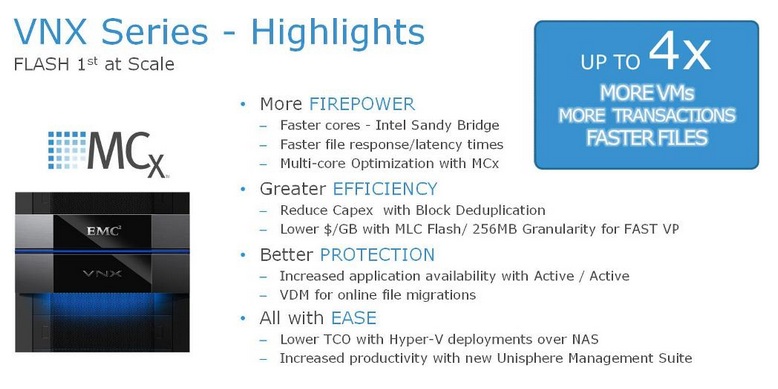

September 2013 marked a new era in mid-range storage: the introduction of the VNX-2 Multicore systems brought many improvements in product features. Below are the highlights of the software and hardware improvements that came with the Multicore architecture.

In this post I am covering one software feature that received some serious enhancements: FAST Cache, which with MCx becomes Multicore FAST Cache.

Improved performance and TCO

The new architecture brought great advancements in performance for a variety of applications. For applications with unpredictable changes in I/O activity and frequent accesses, Multicore FAST Cache has shown great improvements in performance. Customers can now configure FAST Cache sizes ranging from 100 GB to 4.2 TB. Efficient use of Multicore FAST Cache also lowers TCO (Total Cost of Ownership) by delivering better performance at a lower cost ($/IOPS). Multicore FAST Cache uses FLASH drives more efficiently than the legacy configurations did.

Here you can find a great post from Sam Lucido that compares the performance of legacy FAST Cache and Multicore FAST Cache.

Operations

Multicore FAST Cache operates much like the legacy FAST Cache. As with the legacy version, all traditional LUNs and Thin Pools created after FAST Cache is created are FAST Cache enabled by default. For LUNs and Pools that already existed, it has to be enabled manually.

Data Promotion to FAST Cache

When the system identifies that a 64 KB block of data is being accessed frequently, it promotes that block to the FLASH-based, extreme-performance Multicore FAST Cache. The decision is based on the number of accesses to the block within a specific short time period. Subsequent accesses to the block are then served from FAST Cache (the FASTer drives), which increases the IOPS available to the application accessing it.
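To make the promotion rule concrete, here is a minimal Python sketch of counting accesses per 64 KB block within a short time window. It is only an illustration: the class name `PromotionTracker`, the threshold of 3 accesses and the 60-second window are my own assumptions, not documented VNX values or the actual MCx implementation.

```python
from collections import defaultdict, deque

BLOCK_SIZE = 64 * 1024  # 64 KB tracking granularity, as described above


class PromotionTracker:
    """Toy model: promote a 64 KB block after enough accesses in a short window."""

    def __init__(self, threshold=3, window_seconds=60.0):
        # Both values are illustrative assumptions, not documented VNX settings.
        self.threshold = threshold
        self.window = window_seconds
        self.history = defaultdict(deque)  # block index -> recent access times
        self.promoted = set()              # blocks currently in FAST Cache

    def record_access(self, byte_offset, now):
        """Record one access; return True if the block is in FAST Cache afterwards."""
        block = byte_offset // BLOCK_SIZE
        if block in self.promoted:
            return True
        times = self.history[block]
        times.append(now)
        # Drop accesses that have fallen out of the time window.
        while times and now - times[0] > self.window:
            times.popleft()
        if len(times) >= self.threshold:
            self.promoted.add(block)
            del self.history[block]
        return block in self.promoted
```

With these assumed settings, three accesses to offsets inside the same 64 KB block within a minute promote it, while three accesses spread 100 seconds apart never do, because the older ones age out of the window.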

Data already residing on FLASH drives (FLASH Pool LUNs or FLASH-based traditional LUNs) is not promoted to FAST Cache. In addition, small-block sequential I/O, very large I/Os, zero-fill requests, some high-frequency accesses, etc. are handled by Multicore Cache and are not promoted to Multicore FAST Cache.

Read/Write Operations

Shown here is the architecture and data flow with Multicore FAST Cache. When a host accesses a block, the system first checks Multicore Cache. On a hit, the data is served by Multicore Cache itself. On a miss, the system checks the memory map for Multicore FAST Cache. If the data is present in FAST Cache, it is served from there. If a data block misses repeatedly, it is considered for promotion as explained above: the data is promoted and the memory map is updated so that future accesses can be served from FAST Cache.
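The lookup order can be sketched in a few lines of Python. This is a simplified model under my own assumptions: plain dictionaries stand in for Multicore Cache, the FAST Cache memory map and the back-end disks, and the `promote_after` value of 3 misses is hypothetical. The function name `read_block` is also just illustrative.

```python
def read_block(block, dram_cache, fast_cache_map, backend, miss_counts,
               promote_after=3):
    """Return (data, source) for one block read, following the lookup order above."""
    if block in dram_cache:                  # 1. Multicore Cache hit
        return dram_cache[block], "multicore_cache"
    if block in fast_cache_map:              # 2. FAST Cache memory map hit
        return fast_cache_map[block], "fast_cache"
    data = backend[block]                    # 3. Miss in both: read from disk
    miss_counts[block] = miss_counts.get(block, 0) + 1
    if miss_counts[block] >= promote_after:  # Repeated misses: promote the block
        fast_cache_map[block] = data         # and update the memory map
        del miss_counts[block]
    return data, "disk"
```

In this model a block that keeps missing is read from disk `promote_after` times; the next read finds it in the FAST Cache map.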

Multicore FAST Cache is cleaned and flushed to make room for new, frequently accessed data blocks. Dirty pages in FAST Cache (blocks not yet written to disk) are written to disk during periods of minimal activity. Once a dirty page has been written to disk, it is marked as a clean page in FAST Cache. Flushing frees space in FAST Cache by flushing the Least Recently Used (LRU) blocks to disk, which makes new promotions possible.
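The difference between cleaning (dirty pages written to disk but kept in cache) and flushing (LRU pages removed to free space) can be illustrated with a small Python model. This is a sketch only: the class `FastCacheModel`, its method names and the page-count capacity are my own assumptions and do not reflect how the array implements this internally.

```python
from collections import OrderedDict


class FastCacheModel:
    """Toy LRU cache with dirty/clean pages; the oldest entry is evicted first."""

    def __init__(self, capacity):
        self.capacity = capacity    # number of pages (illustrative unit)
        self.pages = OrderedDict()  # block -> (data, dirty), least recent first
        self.disk = {}              # stands in for the back-end drives

    def write(self, block, data):
        self._put(block, data, dirty=True)

    def promote(self, block, data):
        self._put(block, data, dirty=False)

    def touch(self, block):
        """Mark a cached block as most recently used."""
        self.pages.move_to_end(block)

    def clean_idle(self):
        """Cleaning: write dirty pages to disk and keep them cached as clean."""
        for block, (data, dirty) in list(self.pages.items()):
            if dirty:
                self.disk[block] = data
                self.pages[block] = (data, False)

    def _put(self, block, data, dirty):
        if block in self.pages:
            self.pages.pop(block)
        elif len(self.pages) >= self.capacity:
            self._flush_lru()
        self.pages[block] = (data, dirty)

    def _flush_lru(self):
        """Flushing: remove the LRU page, writing it to disk first if dirty."""
        block, (data, dirty) = self.pages.popitem(last=False)
        if dirty:
            self.disk[block] = data
        return block
```

A clean page can simply be dropped when it reaches the LRU end, while a dirty one has to be written out first, which is why cleaning during quiet periods makes later flushes cheaper in this model.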

For FAST Cache configuration, I believe this post will be useful for you.